Main QA points for delivering high-quality SaaS-based solutions

SaaS testing is the process of running test cases against on-demand, web-based software. Testing software delivered as a service differs from testing on-premises applications because it requires access through browsers and centers on web application testing methods.

A robust SaaS performance testing plan exercises the software against real-world traffic in a cloud environment to confirm that the service is available, usable, and optimized for all concurrent web users at all times. By adhering to the best practices of SaaS testing, your team can deploy updates and upgrades quickly, increase ROI, and increase user satisfaction.

SaaS-Based Solutions: 4 Reasons to Test

Reason 1. Smart scalability

The option to change software capacity immediately on request allows tenants to save on cloud service costs. What's more, SaaS vendors use auto-scaling mechanisms that detect the number of current users and adjust the software's capacity accordingly.

Reason 2. Regular and rapid updates

Because tenants work closely with the SaaS provider, all fixes and modifications to the solution go through it. As a rule, errors are corrected and changes are made quickly and frequently. Therefore, a robust QA strategy should be defined to handle the flood of test scenarios that arrive on short notice.

Reason 3. Multi-tenancy

The ability to use shared cloud resources makes SaaS affordable for a wide range of organizations and streamlines software support. Although access is provided to multiple customers, each tenant's data is kept separate and remains invisible to other subscribers. However, the sheer number of integrations with a vendor can cause compatibility and integration difficulties. In this case, improving the quality of the API may be the way out.
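A tenant-isolation check can be sketched roughly as follows. This is only an illustration under stated assumptions: the `TenantStore` class, the `check_isolation` helper, and the tenant names are hypothetical, and a real SaaS product would enforce isolation in its database and access-control layers rather than in an in-memory dictionary.

```python
from collections import defaultdict


class TenantStore:
    """Hypothetical in-memory store that keeps each tenant's records separate."""

    def __init__(self):
        self._records = defaultdict(list)

    def add(self, tenant_id, record):
        self._records[tenant_id].append(record)

    def list_for(self, tenant_id):
        # Return a copy so callers cannot mutate the store's internal list.
        return list(self._records[tenant_id])


def check_isolation(store, tenant_a, tenant_b):
    """Return True if none of tenant_a's records are visible to tenant_b."""
    visible_to_b = store.list_for(tenant_b)
    return all(record not in visible_to_b for record in store.list_for(tenant_a))


# Hypothetical tenants and data, used only for illustration.
store = TenantStore()
store.add("acme", {"invoice": 1})
store.add("globex", {"invoice": 2})
isolated = check_isolation(store, "acme", "globex")
```

In practice the same idea applies at the API level: log in as one tenant and assert that requests for another tenant's resources are refused.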

Reason 4. Adjustable architecture

One more reason why companies choose SaaS is the ability to customize settings so that they match the needs of the business. This requires thorough supervision, because modifications can introduce defects, and a malfunctioning solution can increase the churn rate.

Given these specifics, SaaS testing is more complex than testing other cloud and on-premises apps, and it demands a more in-depth approach to QA activities.

Now let's look at the main points for delivering high-quality SaaS-based solutions

1. Functional testing

By testing all levels of connections between IT product components, through unit, integration, and system testing, QA experts check that the software operates properly. Notably, the requirements under test include numerous cases corresponding to typical user scenarios. Checking numerous configuration combinations makes testing more complete.

2. Performance testing

While on-premises applications run in the user's own environment, the customer experience in SaaS-based products may be influenced by other tenants. Performance checks are therefore necessary: by running stress and load tests, QA engineers identify the upper limits of the software's capacity and evaluate its behavior under the expected number of concurrent users.

3. Interoperability testing

SaaS-based products are expected to perform flawlessly across various browsers and platforms. Before conducting interoperability tests, the QA team determines the most widely used browsers and platforms and identifies the browsers used by only a few clients so that they can be excluded. For every browser or platform tested, QA specialists cover the full scope of the test configuration to provide seamless software operation for a wide range of users.

4. Usability testing

To reduce churn rates and build long-term relationships with end users, companies strive above all to make the app convenient to use. By providing a simple information architecture, simple workflows and interactions, visual readability, and adequate feedback on commonly used functions, the team can satisfy customers with a user-friendly application.

5. Security testing

Because they handle sensitive data, SaaS-based solutions need to provide highly secure storage and disposal of information. Because they support a variety of accounts and roles, these applications require full validation of access control. To identify vulnerabilities and avoid data breaches, QA experts perform penetration testing, probing for potential weak points.

6. Compliance with requirements

Staying competitive also means meeting worldwide standards. Depending on the industry, software testing may need to cover HIPAA checklists for health products, OWASP security recommendations for web and mobile applications in any domain, GDPR for secure data storage and worldwide transfers, and much more.

7. API testing

API testing is required wherever SaaS products integrate with customer platforms and other third-party solutions. Instead of using standard user inputs and outputs, QA engineers run positive and negative calls against the APIs and analyze the responses. This approach makes it possible to ensure in advance that the API and the calling solution work properly together. It focuses primarily on the business logic layer of the software architecture.
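The idea of positive and negative API calls can be illustrated with a small sketch. Everything here is an assumption made for illustration: `create_user` stands in for a real endpoint, and the status codes and payloads are hypothetical rather than taken from any particular product.

```python
def create_user(payload):
    """Hypothetical API endpoint: returns (status_code, body)."""
    email = payload.get("email")
    if not isinstance(email, str) or "@" not in email:
        return 400, {"error": "invalid email"}
    return 201, {"id": 1, "email": email}


def run_api_checks(endpoint):
    """Run positive and negative calls and record whether each met expectations."""
    cases = [
        ("valid email", {"email": "user@example.com"}, 201),   # positive call
        ("missing email", {}, 400),                            # negative call
        ("malformed email", {"email": "not-an-email"}, 400),   # negative call
        ("wrong type", {"email": 42}, 400),                    # negative call
    ]
    results = {}
    for name, payload, expected_status in cases:
        status, _body = endpoint(payload)
        results[name] = status == expected_status
    return results


results = run_api_checks(create_user)
```

Against a deployed service, the same table of cases would be sent over HTTP, and the assertions would also inspect the response bodies.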

8. Regression testing

Once new functionality is implemented, the team needs to verify that the latest changes have not affected existing features. SaaS regression testing is an elaborate and cumbersome process: it includes all the test types mentioned above and a wide range of test cases.

InfyOm has experience delivering comprehensive QA assistance with solid regression testing. Learn how our QA engineers tested and streamlined the software, ensuring the quality of the SaaS platform for public housing authorities.

Summary

If you want to build a SaaS application that is as bug-free as possible, your IT strategy needs to include SaaS testing that accounts for its specifics: wise use of cloud resources, prompt updates, multi-tenancy, and customization.

By applying the QA tips from the InfyOm list, one can improve the quality of solutions, obtain the required business and operational value, and reduce churn rates.

Harmful Browser Security Threats: How to Avoid Them? - Part 2

In our previous tutorial, we looked at the most common browser security threats. Below are seven tips and recommendations on how you can protect yourself from these threats.

1. Saved Login Credentials

It is recommended not to save credentials in the browser. Instead, use password managers like Password Safe and KeePass to store credentials.

Password managers work through a central master password and help you keep your website passwords secure.

Administrators can also be given access to a saved login or URL, depending on your convenience and security requirements.

2. Removing Browsing History

Deleting the browsing history and browser cache is a way to remove risky information, especially after confidential activities such as online banking. This step can be performed manually in the browser or set to happen automatically when the browser is closed. Another way to stay protected from this threat is to use Incognito or Private Browsing mode, where nothing is saved that could be harvested.

3. Disable Cookies

The best solution to the threat of cookies is to disable them when using your browser.

However, this is not ideal, as many websites rely on cookies and offer only limited functionality once they are turned off.

Disabling cookies may also result in annoying prompts. Clearing cookies periodically can help protect your browser, but be aware that, as a side effect, websites will ask you to enter the same information again.

4. Reduce Browser Cache by using Incognito Mode

You can protect yourself from cache-related threats by browsing in incognito mode and by manually clearing the cache when required, especially after a sensitive browsing session.

5. Look for Standard Java Configuration

Java is a widely used language that runs code on Windows and other operating systems. It is designed so that applets run in a separate sandbox environment, which helps prevent them from accessing other operating system components and applications. More often than not, however, vulnerabilities allow these small applications to escape from the sandbox and cause harm.

To avoid Java-related threats, find and choose a standard Java security configuration that works best with your browser and PC, and deploy it through a central source such as Group Policy.

6. Third-Party Plugins or Extensions

Browsers often have third-party plugins or extensions installed for various tasks, for example, JavaScript or Flash for viewing or working with content. Both of these come from well-known, high-quality vendors; however, there are various plugins and add-ons from less legitimate sources that may not offer any business-related benefit. For this type of threat, it is recommended to allow only business-related plugins and extensions as part of an official business policy, for example, a policy on Internet and email use. Depending on the browser(s) used in your organization, explore ways to block unwanted plugins or whitelist appropriate ones, so that only those plugins can be installed. Ensure that plugins are configured to update automatically, or deploy new versions through centralized mechanisms (for example, Active Directory Group Policy or System Center Configuration Manager).

7. Ads Popping up and Redirects

Pop-up ads are a well-known form of malicious advertising that can be particularly confusing and difficult to deal with. They regularly show false notifications, for example, claiming that your PC has an infection and encouraging you to install their antivirus product to remove it. Usually, malware is what actually ends up being installed. These pop-ups are hard to close because there is often no X button to close them with.

The best alternative is to close the browser completely, or to use Task Manager in Windows or the kill command in Linux to close the application.

That's it. If you want a system free from harm, take these tips and apply them to your web browsing. They will help protect you from security threats.

Harmful Browser Security Threats: How to Avoid Them?

The web browser is the application most people use to access the Internet. Modern browsers are very advanced, with improved usability and ubiquity. Users can choose among different browsers, each with its own perceived and real benefits. However, none of them is safe from security threats. In fact, web browsers are especially exposed to security vulnerabilities, and whenever users interact with websites, they risk picking up malware and other threats.

The 5 most common browser security threats and how to protect your system

With that in mind, here are some of the most common browser security threats that you need to protect your system from:

1. Saved Login Credentials

Bookmarks paired with saved logins for the linked sites are a terrible combination and do your system no favors. With both in place, even a hacker with little knowledge can break in. Some websites use two-factor authentication, such as sending an OTP to your mobile phone, to control access. However, many of them use this only as a one-time access token, so that a person can confirm their identity on the system they intend to connect from. Saving credentials is not good for your browser or for your system in general. With them, cybercriminals can easily reset important identifiers and profiles on almost every website you visit. They can do this from anywhere and at any time. Once they have your IDs and passwords, they can use them from any system of their choice.

2. Access to Browser History

Your browsing history is a kind of map that keeps track of what you are doing and which sites you are visiting. It shows not only which sites you visited, but also when and for how long. If a criminal wants to get your credentials for the sites you access, knowing from your browsing history which sites those are makes the job much easier.

3. Cookies

Cookies, which are locally stored files that identify your association with certain sites, are another common browser security threat. Like browsing history, they can be used to track the sites you visit and to obtain credentials.

4. Browser Cache

The browser cache stores sections of web pages, which makes sites easier and faster to load every time you visit. It can also reveal which site or portal you have accessed and the content you have viewed. It also saves your location and device details, making it a risky item, as anyone with access to it can identify your location and device.

5. Autofill Information

Autofill information can pose a huge threat. Browsers like Chrome and Firefox store your address information, sometimes your profile information, and other personal information. But are you prepared if it falls into the wrong hands? If it does, the criminal becomes aware of, and privy to, all your personal details.

In our next tutorial, we will look at tips and recommendations on how you can protect yourself from these threats.

How to test a reCAPTCHA form - prepare yourself for bots!

Nowadays, attackers regularly target secure data systems, so let's look at the security testing criteria for reCAPTCHA forms.

reCAPTCHA is a technology that assesses the probability that the entity using your web code (page, app, portal, etc.) is a human and not a bot (or the other way around). It gathers information about the behavior of the user or bot and encapsulates it in a token that is sent to your server. Your server then sends the token to Google, which returns an assessment of how probable it is that the token was generated by a human. The response that Google returns to your server contains this assessment.

Let's look at how to test it 🛠️
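As a rough illustration of how a server might interpret that assessment, the sketch below evaluates an already-parsed verification response. It assumes the response is a JSON object with a `success` flag and, for score-based reCAPTCHA, `score` and `action` fields; the `is_probably_human` helper, the 0.5 threshold, and the sample values are assumptions for illustration, not part of the original article.

```python
def is_probably_human(verification, min_score=0.5, expected_action=None):
    """Decide whether to accept a request based on a parsed verification response."""
    if not verification.get("success", False):
        return False
    # "score" is only present in score-based responses; skip the check otherwise.
    score = verification.get("score")
    if score is not None and score < min_score:
        return False
    if expected_action is not None and verification.get("action") != expected_action:
        return False
    return True


# Hypothetical sample responses, already parsed from JSON.
human_like = {"success": True, "score": 0.9, "action": "submit", "hostname": "example.com"}
bot_like = {"success": True, "score": 0.1, "action": "submit", "hostname": "example.com"}
invalid_token = {"success": False, "error-codes": ["invalid-input-response"]}

decisions = [is_probably_human(r, expected_action="submit")
             for r in (human_like, bot_like, invalid_token)]
```

The threshold is a tuning decision: a stricter value blocks more bots but may also reject more real users.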

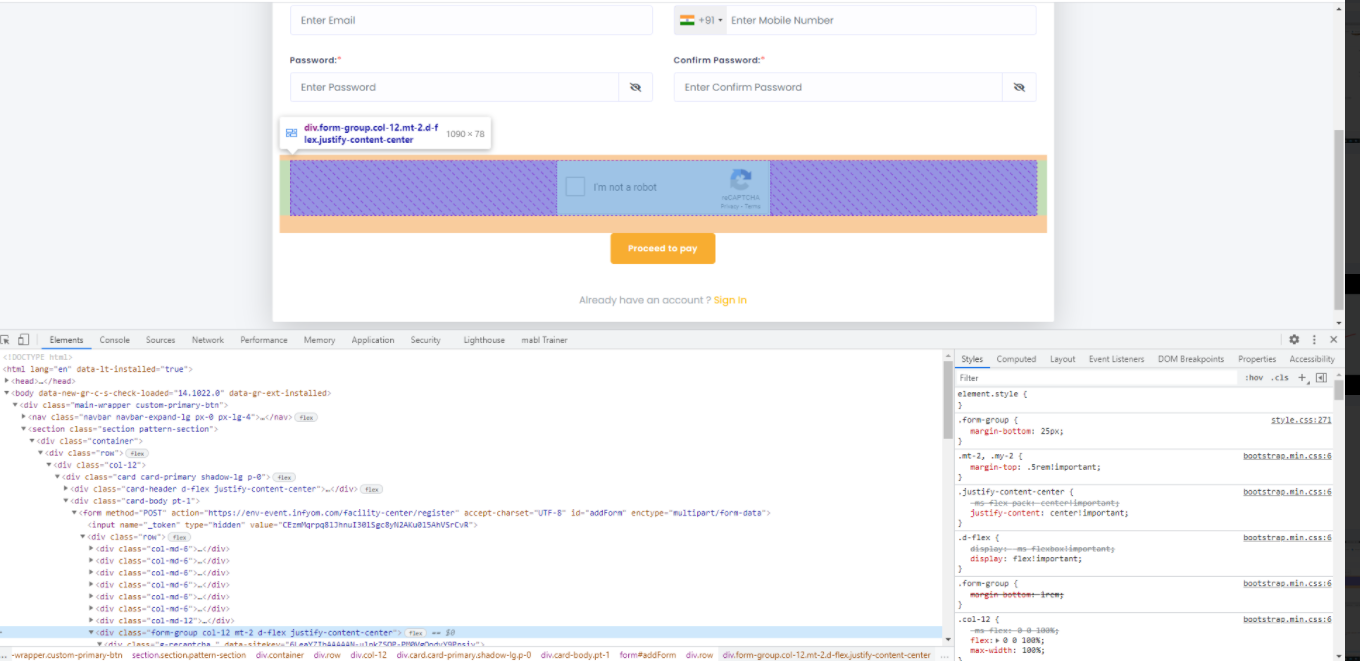

First, we validate from the frontend

On any reCAPTCHA form, remove the reCAPTCHA div using Inspect Element and then try to save. Validation must still take place, and no record should be stored on the backend, as shown in the image.

After you remove this div and save the form, there should be a validation message for reCAPTCHA verification and the form should not be saved. If the form is submitted and the data is stored in the data table, the system has failed the test.

Now let's see how we validate from Postman

First, enter the URL of the form under test, select the POST method, and in the body add all the fields that are present in the form, as shown in the image.

Next, in the headers, add the CSRF token, X-Requested, and cookie entries in the Key column, together with their respective values, as shown in the image.

The CSRF token and XSRF-TOKEN are stored in the cookie, which you can get from the form page using Inspect Element.

Now click Send and verify that the returned status is false, as shown in the image.

If the status is true, the data has been stored in the table, which is a problem: the reCAPTCHA validation has failed.

That is how to test a reCAPTCHA form. Hope this helps.
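The behavior that these checks expect from the backend can be sketched as follows. This is an illustration under assumptions: `handle_submission` and `fake_verifier` are hypothetical, the form field name `g-recaptcha-response` is the default name used by reCAPTCHA widgets, and a real handler would call the verification service instead of the stand-in.

```python
def handle_submission(form, verify_token, table):
    """Hypothetical form handler: store the row only when verification passes."""
    token = form.get("g-recaptcha-response")
    if not token or not verify_token(token):
        return {"status": False, "message": "reCAPTCHA verification failed"}
    table.append({k: v for k, v in form.items() if k != "g-recaptcha-response"})
    return {"status": True}


def fake_verifier(token):
    # Stand-in for the real call to the verification service.
    return token == "valid-token"


table = []
# Request sent without the reCAPTCHA field, as when the div is removed.
no_token = handle_submission({"name": "Ann"}, fake_verifier, table)
# Request sent with a forged token, as from an API client.
bad_token = handle_submission(
    {"name": "Ann", "g-recaptcha-response": "forged"}, fake_verifier, table
)
rows_after_rejections = len(table)
good = handle_submission(
    {"name": "Ann", "g-recaptcha-response": "valid-token"}, fake_verifier, table
)
```

Both rejected requests should return a false status and leave the table empty, which is exactly what the frontend and Postman checks look for.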

Performance Testing Part-1

What is Performance Testing?

Performance testing is a non-functional testing method performed to determine how a system behaves in terms of responsiveness and stability under various workloads. It measures quality characteristics of a system such as scalability, reliability, and resource use.

Types of Performance Testing

There are six main types of performance testing. Let's look at each in detail.

Load Testing

It is the simplest form of performance testing, conducted to understand the behavior of the system under a specific load. Load tests measure important business-critical transactions and also monitor the load on the database, application server, etc.

Stress Testing

It is performed to find the upper limit of the system's capacity and to determine how the system behaves when the current load greatly exceeds the expected maximum.

Spike Testing

A spike test is performed by suddenly increasing the number of users by a very large amount and measuring system performance. The main objective is to determine whether the system can sustain the workload.

Scalability Testing

It measures performance in terms of the software's ability to scale up or down as demand changes. For example, a scalability test could be performed based on the number of user requests.

Volume Testing

In volume testing, a large amount of data is loaded into the database and the overall behavior of the software system is monitored. The goal is to check the performance of the software application under different database sizes.

Endurance Testing

It is done to make sure the software can handle the expected load over a long period of time.
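To make the idea of a load test concrete, here is a minimal sketch of a harness that simulates concurrent users and summarizes latencies. It is an illustration only: `fake_request` stands in for a real call to the system under test, the function names and user counts are hypothetical, and dedicated load-testing tools offer far more than this.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def timed_call(task):
    """Run task once and return its duration in seconds."""
    start = time.perf_counter()
    task()
    return time.perf_counter() - start


def run_load(task, users, requests_per_user):
    """Simulate `users` concurrent users, each issuing `requests_per_user` calls."""
    def one_user():
        return [timed_call(task) for _ in range(requests_per_user)]

    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(one_user) for _ in range(users)]
        return [latency for f in futures for latency in f.result()]


def summarize(latencies):
    """Return count, mean, and 95th-percentile latency (nearest-rank method)."""
    ordered = sorted(latencies)
    rank = max(1, -(-95 * len(ordered) // 100))  # integer ceil(0.95 * n)
    return {
        "count": len(ordered),
        "mean": sum(ordered) / len(ordered),
        "p95": ordered[rank - 1],
    }


def fake_request():
    # Stand-in for a real request to the system under test.
    time.sleep(0.001)


report = summarize(run_load(fake_request, users=5, requests_per_user=4))
```

The same harness covers several of the types above by changing its inputs: raise `users` step by step for a stress test, jump it suddenly for a spike test, or run it for hours for an endurance test.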

We will cover the full performance testing process in our next article. To be continued...

How to Test Android Applications - Part 2

In the previous article, we covered four kinds of test cases for Android applications.

In this article, we will cover more kinds of test cases for Android applications.

5. Compatibility testing test cases

Six compatibility test case scenario questions:

- Have you tested on the best test devices and operating systems for mobile apps?

- How does the app work with different parameters such as bandwidth, operating speed, capacity, etc.?

- Will the app work properly with different mobile browsers such as Chrome, Safari, Firefox, Microsoft Edge, etc.?

- Does the app's user interface remain consistent, visible and accessible across different screen sizes?

- Is the text readable for all users?

- Does the app work seamlessly in different configurations?

6. Security testing test cases

Twenty-four security testing scenarios for mobile applications:

- Can the mobile app resist any brute force attack to guess a person's username, password, or credit card number?

- Does the app allow an attacker to access sensitive content or functionality without proper authentication? This includes making sure communications with the backend are properly secured.

- Is there an effective password protection system within the mobile app?

- Verify dynamic dependencies.

- What measures have been taken to prevent attackers from exploiting these vulnerabilities?

- What steps have been taken to prevent SQL injection-related attacks?

- Identify and repair any unmanaged code scenarios.

- Check whether certificates are validated and whether the app implements certificate pinning.

- Protect your application and network from denial of service attacks.

- Analyze data storage and validation requirements.

- Implement session management to prevent unauthorized users from accessing information they are not entitled to.

- Check whether any cryptographic code is broken and repair whatever is found.

- Are the business logic implementations secure and not vulnerable to any external attack?

- Analyze file system interactions, determine any vulnerabilities and correct these problems.

- What protocols are in place should hackers attempt to reconfigure the default landing page?

- Protect from client-side harmful injections.

- Protect from malicious runtime injections.

- Investigate and prevent any malicious possibilities from file caching.

- Protect from insecure data storage in app keyboard cache.

- Investigate and prevent malicious actions by cookies.

- Provide regular checks for data protection analysis.

- Investigate and prevent malicious actions from custom-made files.

- Prevent memory corruption cases.

- Analyze and prevent vulnerabilities from different data streams.
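One of the scenarios above, preventing SQL injection, can be illustrated with a short sketch. It uses Python's built-in `sqlite3` module and a hypothetical `users` table purely for illustration; an Android app would apply the same principle of parameterized queries through its own database API.

```python
import sqlite3


def find_user(conn, username):
    """Look up a user with a parameterized query; input is never spliced into the SQL."""
    cursor = conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    )
    return cursor.fetchall()


# Hypothetical in-memory database used only for this example.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])

legit = find_user(conn, "alice")
# A classic injection payload is treated as a literal string and matches nothing.
attack = find_user(conn, "' OR '1'='1")
```

A security test case would send payloads like the one above through every input field and confirm that no extra rows come back.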

7. Localization testing test cases

Eleven localization testing scenarios for mobile applications:

- The translated content must be checked for accuracy. This should also include all verification or error messages that may appear.

- The language should be formatted correctly (e.g., Arabic written from right to left, the Japanese name order of Last Name, First Name, etc.).

- The terminology is consistent across the user interface.

- The time and date are correctly formatted.

- The currency is the local equivalent.

- The colors are appropriate and convey the right message.

- Ensure that the license and rules comply with the laws and regulations of the destination region.

- The layout of the text content is error free.

- Hyperlinks and hotkey functions work as expected.

- Entry fields support special characters and are validated as necessary (e.g., postal codes).

- Ensure that the localized UI has the same type of elements and numbers as the source product.

8. Recoverability testing test cases

Five recoverability testing scenario questions:

- Will the app continue from the last operation in the event of a hard restart or system crash?

- What, if anything, causes crash recovery and transaction interruptions?

- How effective is the app at restoring itself after an unexpected interruption or crash?

- How does the application handle a transaction during a power outage?

- What is the expected process when the app needs to recover data directly affected by a failed connection?

9. Regression testing test cases

Four regression testing scenarios for mobile applications:

- Check the changes to existing features.

- Check the new changes implemented.

- Check the new features added.

- Check for potential side effects after changes are made.

That's it. If you want a good application, take these tips and follow these cases when testing your Android application. They will help you improve the quality and consistency of your applications.

How to Test Android Applications

A few main things to remember when testing an Android application are mentioned below:

1. Functional testing test cases

There are many hands involved in creating a mobile app, and these stakeholders may have different expectations. Functional testing determines whether a mobile app complies with these various requirements and uses. It examines and validates all functions, features, and competencies of a product.

Twelve functional test case scenario questions:

- Does the application work as intended when starting and stopping?

- Does the app work accordingly on different mobile and operating system versions?

- Does the app behave accordingly in the event of external interruptions (e.g., receiving an SMS, being minimized during an incoming phone call, etc.)?

- Can the user download and install the app with no problem?

- Can the device multitask as expected when the app is in use or running in the background?

- Do other applications work satisfactorily after the app is installed?

- Do social networking options like sharing, publishing, etc. work as needed?

- Do mandatory fields work as required?

- Does the app support payment gateway transactions?

- Are page scrolling scenarios working as expected?

- Can the user navigate between different modules as expected?

- Are appropriate error messages received if necessary?

There are two ways to run functional testing: scripted and exploratory.

Scripted

Running scripted tests is just that: a structured, scripted activity in which testers follow predetermined steps. This allows QA testers to compare actual results with expected ones. These types of tests are usually confirmatory in nature, meaning that you are confirming that the application can perform the desired function. Testers generally find more problems when they have more flexibility in test design.

Exploratory

Exploratory testing investigates and finds bugs and errors on the fly. It allows testers to manually discover software problems that are often unforeseen, because the QA team tests the app the way most users actually use it. It treats learning, test design, test execution, and interpretation of test results as complementary activities that run in parallel throughout the project.

Related: Scripted Testing Vs Exploratory Testing: Is One Better Than The Other?

2. Performance testing test cases

The primary goal of performance testing is to ensure the performance and stability of your mobile application.

Seven performance test case scenarios ensure:

- Can the app handle the expected load volumes?

- What are the various mobile app and infrastructure bottlenecks preventing the app from performing as expected?

- Is the response time as expected? Are battery drain, memory leaks, GPS, and camera performance within the required guidelines?

- Is the current network coverage able to support the app at peak, medium, and minimum user levels?

- Are there any performance issues if the network changes from/to Wi-Fi and 2G / 3G / 4G?

- How does the app behave during intermittent phases of connectivity?

- Do the existing client-server configurations provide the optimum performance level?

3. Battery usage test cases

While battery usage is an important part of performance testing, mobile app developers must make it a top priority. Apps are becoming more and more demanding in terms of computing power. So, when developing your mobile app testing strategy, understand that battery-draining mobile apps degrade the user experience.

Device hardware, including battery life, varies by model and manufacturer. Therefore, QA testing teams must have a variety of new and older devices on hand in their mobile device laboratory. In addition, the test environment must replicate real-world conditions such as operating system, network conditions (3G, 4G, WLAN, roaming), and multitasking for the purposes of the battery consumption test.

Seven battery usage test case scenarios to pay special attention to:

- Mobile app power consumption

- User interface design that uses intense graphics or results in unnecessarily high database queries

- Whether battery life allows the app to operate at expected load volumes

- Battery low and high-performance requirements

- Application operation when the battery is removed

- Battery usage and data leaks

- New features and updates do not introduce new battery usage and data leaks

Related: The secret art of battery testing on Android

4. Usability Testing Test Cases

Usability testing of mobile applications ensures that end users get an intuitive and user-friendly interface. This type of testing is usually done manually, to ensure the app is easy to use and meets real users' expectations.

Ten usability test case scenarios ensure:

- The buttons are of a user-friendly size.

- The position, style, etc. of the buttons are consistent within the app

- Icons are consistent within the application

- The zoom in and out functions work as expected

- The keyboard can be minimized and maximized easily.

- An accidental action, such as touching the wrong item, can be easily undone.

- Context menus are not overloaded.

- Verbiage is simple, clear, and easily visible.

- The end-user can easily find the help menu or user manual in case of need.

- Related: High impact usability testing that is actually doable

We will see more points in our next articles.

Bug Life Cycle in Software Testing

Introduction to Bug Life Cycle

The bug life cycle, or defect life cycle, is the specific set of states that a defect goes through before it is closed or resolved. When a defect is detected, by a tester or someone else on the team, the life cycle provides a tangible way to track the progress of the fix. During the defect's life, multiple individuals touch it, directly or indirectly. Fixing it is not necessarily the responsibility of a single individual: at different stages of the life cycle, different members of the project team are responsible for the defect. The number of states a defect goes through varies from project to project. The life cycle described below covers all possible states.

What’s The Difference Between Bug, Defect, Failure, Or Error?

Bug: If testers find any mismatch in the application/system during the testing phase, they call it a bug.

There is some inconsistency in the usage of bug and defect. Many people say that bug is an informal name for a defect.

Defect: The variation between the actual results and the expected results is known as a defect.

If a developer finds an issue and corrects it themselves during the development phase, it is called a defect.

Failure: Once the product is deployed, if customers find any issues, they call the product a failed product. An issue that an end user finds after release is called a failure.

Error: An error is a coding mistake that prevents a program from compiling or running. If a developer is unable to successfully compile or run a program, they call it an error.

Software Defects Are Basically Classified According To Two Types:

Severity

Bug severity, or defect severity, is the degree of impact a bug or defect has on the software application under test. The greater the effect of a bug/defect on system functionality, the higher its severity level. A Quality Assurance engineer usually determines the severity level of a bug/defect.

Types of Severity

In software testing, the severity of a bug/defect can be categorized into four levels:

Critical: This defect indicates a complete shutdown of the process; nothing can proceed further

Major: It is a highly severe defect that collapses the system. However, certain parts of the system remain functional

Medium: It causes some undesirable behavior, but the system is still functional

Low: It won't cause any major breakdown of the system

Priority

Priority is defined as the order in which a defect should be fixed. The higher the priority the sooner the defect should be resolved.

Priority Types

The priority of a bug/defect can be categorized into three levels:

Low: The defect is an irritant, but it can be repaired once the more serious defects have been fixed

Medium: The defect should be resolved during the normal course of development activities. It can wait until a new version is created

High: The defect must be resolved as soon as possible because it affects the system severely, and the system cannot be used until it is fixed

(A) High Priority, High Severity

An error occurs in the basic functionality of the application and prevents the user from using the system. (E.g., on a site that maintains student details, if saving a record fails, this is a high priority and high severity bug.)

(B) High Priority, Low Severity

High priority and low severity indicates a defect that has to be fixed immediately but does not affect the application's functionality. (By contrast, high severity and low priority indicates a defect that has to be fixed, but not immediately.)

(C) Low Priority, Low Severity

A low priority, low severity bug has almost no impact on functionality, but it is still a valid defect that should be corrected. Examples include spelling mistakes in error messages shown to users and cosmetic defects in the look and feel of a feature.

(D) Low Priority, High Severity

This is a high severity error, but it can be given a low priority because it can be resolved in the next release as a change request. This type of defect falls in the category of high severity but low priority.
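The relationship between the two classifications can be sketched in code. This is a hypothetical model for illustration: the `Defect` class, the sample backlog, and the choice to break priority ties by severity are assumptions, not rules stated in the article.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    MAJOR = 3
    CRITICAL = 4


class Priority(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Defect:
    title: str
    severity: Severity
    priority: Priority


def fix_order(defects):
    """Order defects by priority first, then by severity, highest first."""
    return sorted(defects, key=lambda d: (d.priority, d.severity), reverse=True)


# Hypothetical backlog covering the four combinations (A) to (D).
backlog = [
    Defect("Typo in error message", Severity.LOW, Priority.LOW),
    Defect("Cannot save student record", Severity.CRITICAL, Priority.HIGH),
    Defect("Crash in rarely used report", Severity.CRITICAL, Priority.LOW),
    Defect("Wrong logo on home page", Severity.LOW, Priority.HIGH),
]
ordered_titles = [d.title for d in fix_order(backlog)]
```

Priority drives the order because it expresses when a defect should be fixed, while severity only describes how much damage it does.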

Bug Life Cycle

New: When a new defect is logged and posted for the first time, it is assigned the status "New."

Assigned: Once the bug is posted by the tester, the test lead approves the bug and assigns it to the developer team

Open: The developer starts analyzing the defect and working on the fix

Fixed: When a developer makes the necessary code change and verifies the change, he or she can set the bug status to "Fixed."

Pending retest: Once the defect is fixed, the developer hands the fixed code over to the tester for retesting. Since the retesting is still pending on the tester's end, the status assigned is "Pending retest."

Retest: At this stage, the tester retests the code to check whether the developer has fixed the defect, and changes the status to "Retest."

Verified: The tester retests the bug after the developer has fixed it. If no bug is detected in the software, the bug is fixed and the status assigned is "Verified."

Reopen: If the bug persists even after the developer has fixed it, the tester changes the status to "Reopened." The bug goes through the life cycle once again.

Closed: If the bug no longer exists, the tester assigns the status "Closed."

Duplicate: If the defect is reported twice or corresponds to the same concept as another bug, the status is changed to "Duplicate."

Rejected: If the developer feels the defect is not a genuine defect, they change its status to "Rejected."

Deferred: If the bug is not of prime priority and is expected to be fixed in the next release, the status "Deferred" is assigned to it

Not a bug: If it does not affect the functionality of the application, the status assigned to the bug is "Not a bug."
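The states above can be modeled as a small state machine. The transition table below is an assumption pieced together from the descriptions in this article; real bug trackers and project workflows define their own allowed transitions, and the `advance` and `replay` helpers are hypothetical.

```python
# Assumed transitions between the statuses described above.
ALLOWED = {
    "New": {"Assigned", "Rejected", "Duplicate", "Deferred", "Not a bug"},
    "Assigned": {"Open"},
    "Open": {"Fixed", "Rejected", "Duplicate", "Deferred", "Not a bug"},
    "Fixed": {"Pending retest"},
    "Pending retest": {"Retest"},
    "Retest": {"Verified", "Reopened"},
    "Verified": {"Closed"},
    "Reopened": {"Assigned"},
    "Closed": {"Reopened"},
}


def advance(current, new):
    """Return the new status, or raise ValueError if the transition is not allowed."""
    if new not in ALLOWED.get(current, set()):
        raise ValueError(f"cannot move from {current!r} to {new!r}")
    return new


def replay(statuses):
    """Walk a sequence of statuses, validating each step, and return the last one."""
    state = statuses[0]
    for nxt in statuses[1:]:
        state = advance(state, nxt)
    return state


final = replay([
    "New", "Assigned", "Open", "Fixed", "Pending retest",
    "Retest", "Reopened", "Assigned", "Open", "Fixed",
    "Pending retest", "Retest", "Verified", "Closed",
])
```

Encoding the workflow this way makes invalid jumps, such as moving a bug from "New" straight to "Closed", fail loudly instead of slipping through.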

Defect Life Cycle Explained

- The tester finds the defect, and the status "New" is assigned to it.

- The defect is forwarded to the project manager for analysis.

- The project manager decides whether the defect is valid.

- If the defect is invalid, the project manager assigns the status "Rejected".

- If the defect is not rejected, the next step is to check whether it is in scope. Suppose we have another function, email functionality, for the same application, and you find a problem with it. If it is not part of the current release, such defects are assigned a postponed or "Deferred" status.

- Next, the manager checks whether a similar defect has been raised earlier. If so, the status "Duplicate" is assigned to the defect.

- If not, the defect is assigned to the developer, who starts fixing the code. During this phase, the defect is assigned the status "In progress".

- Once the code is fixed, the defect is assigned the status "Fixed".

- Next, the tester tests the code again. If the test case passes, the defect is closed. If the test case fails again, the defect is reopened and assigned to the developer.

- Consider a situation where, during the first release of a flight reservation application, a defect was found in the fax order feature; it was fixed and assigned the status "Closed". During the second upgrade release, the same defect occurred again. In such cases, a closed defect is reopened.

That's all about the Bug Life Cycle.